Can Mutual Sabotage Forestall an Unbridled Race to Superintelligence?

Powerful AI is a hard thing to deter. Complicated red lines, such as an intelligence recursion, do not help.

If there’s one fact we cannot dispute, it’s that AI keeps getting better. Not long ago, AI couldn’t write coherent paragraphs, refactor code, or follow multi-step instructions. Those days already feel distant. The most powerful models today match some of us at what we do best. And they will likely continue to improve, since experts estimate that the length of tasks AI can complete is doubling every seven months. At that pace, we’re probably on the cusp of achieving artificial general intelligence (AGI), meaning AI systems as capable as humans at most economically valuable work.

Frontier AI labs echo this projection. The top brass at Anthropic, OpenAI, and Google DeepMind generally expect AGI within 2–5 years. There’s good reason to doubt them, as they have clear incentives to hype their own products. But experts outside these labs share similar timelines. Yoshua Bengio and Geoffrey Hinton, pioneers of deep learning, both say AGI may arrive in 5 years. Even amongst skeptics who project longer timelines, many now expect AGI to arrive sooner than they had previously envisaged. No one knows precisely when. But if these shrinking timelines are anything to go by, superintelligence (the more radically capable version of AGI that far surpasses human capabilities across all cognitive domains) might be closer than we think.

If superintelligence is attained, it will redefine the limits of possibility and could upend the global balance of power. Governments are waking up to this double-edged prospect, entrenching themselves deeper in the pursuit of powerful AI systems. The United States is pulling multiple policy levers to stymie China’s development of advanced AI while ramping up its own efforts. Recent US export controls that prevent China from accessing AI chips, and the semiconductor manufacturing equipment needed to produce them, are so severe that even America’s own chip designers have decried them. China, meanwhile, has resorted to a creative array of smuggling tactics to circumvent US chip restrictions and obtain the advanced GPUs that help it advance its AI capabilities.

At the same time, both countries are investing heavily in AI militarization. The Pentagon has struck military deals with OpenAI, Anduril and Scale AI, while talk of a US Manhattan Project for AGI is being peddled by some in government.

Though there is hardly any evidence of the same race-to-AGI narrative playing out within China, China notably wants to be the global leader in AI by 2030 and continues to invest heavily in AI-enabled weapons systems. I’d think that wanting to lead in an endeavor where your main opponent is gearing up to race ahead probably nudges you to hurry up too. But regardless of whether my intuition holds true for China’s AI ambitions, it’s hard to shake the thought that powerful AI will become a major front in the broader US-China power struggle and possibly escalate into a full-scale arms race.

In summary, we are faced with: a new technology, a geopolitical power tussle, and potential gains of untold proportions. The situation seems oddly familiar; it’s almost like we’ve been here before.

Cold War Flashbacks

After the US announced itself as a nuclear power at the tail end of World War II, the USSR raced to catch up. No surprises there. Both nations were entering a contest for global dominance: the Cold War. Not long after, the Soviets too heralded their attainment of nuclear power by detonating their first atomic bomb in 1949.

By the time both nations had strategic bombers that improved their ability to deliver nuclear strikes against the other, the US had adopted a policy of massive retaliation: should the Soviets invade US allies in Europe, America would respond with a massive atomic attack. By 1962, when the mutual development of ballistic missile submarines made it clear that each side would be destroyed by the other in a nuclear confrontation, US Defense Secretary Robert McNamara formally welcomed into US defense policy what would come to be known as Mutual Assured Destruction (MAD).

The logic of MAD is simple. Each side has enough nuclear weaponry to destroy the other. It is near impossible for a first strike by the aggressor to destroy the complete nuclear arsenal of the aggressed. This means that the aggressed is assured to retaliate with equal or greater force after absorbing a first strike. Often, the retaliatory strike is fail-deadly, so even if the aggressed is wiped out by a decapitation strike, retaliation will happen nonetheless. As each side knows that initiating a nuclear strike would inevitably lead to its own demise, both are deterred from striking first by the rational fear of assured destruction. The result? A standoff. A stable but tense spell of peace.

In the face of potentially catastrophic AI systems and rising US-China competition, some are invoking the Cold War success of MAD to suggest that deterrence theory may again help us avoid existential-scale catastrophe. Superintelligence Strategy, a paper by Dan Hendrycks, Eric Schmidt and Alexandr Wang, presents the most developed version of this idea so far: the theory of Mutual Assured AI Malfunction (MAIM). This post shares my thoughts on why using an intelligence recursion as the red line in MAIM’s deterrence regime undermines the framework.

Deterrence in the Age of AI

Superintelligence Strategy argues that a race to superintelligence can threaten global security and stability in two ways:

A state that possesses a highly capable AI poses a direct threat to the survival of others. A close-enough historical parallel is America’s status as a nuclear hegemon from 1945 until the Soviets achieved parity in 1949, during which the security of many states depended uneasily on American goodwill.

A state racing to develop superintelligence and attain superiority over others may, in prioritizing speed over caution, lose control of powerful AI (either to rogue actors or a misaligned AI system itself), thereby endangering global security.

In order to preserve themselves against both eventualities, states may sabotage or “MAIM” rival AI projects that are deemed “destabilizing” or too close to superintelligence. Think of it as one nation saying to others, “if you attempt to build AI systems that might give you strategic hegemony or destabilize the balance of power, we will make sure you fail.” States will likely be deterred from building systems with such unacceptable capabilities because of the assurance of sabotage, while those who go ahead regardless will face MAIM attacks that ensure they fail. By preventing any single nation from racing towards AI-enabled dominance, we may maintain global stability and curtail many loss-of-control scenarios, the paper claims.

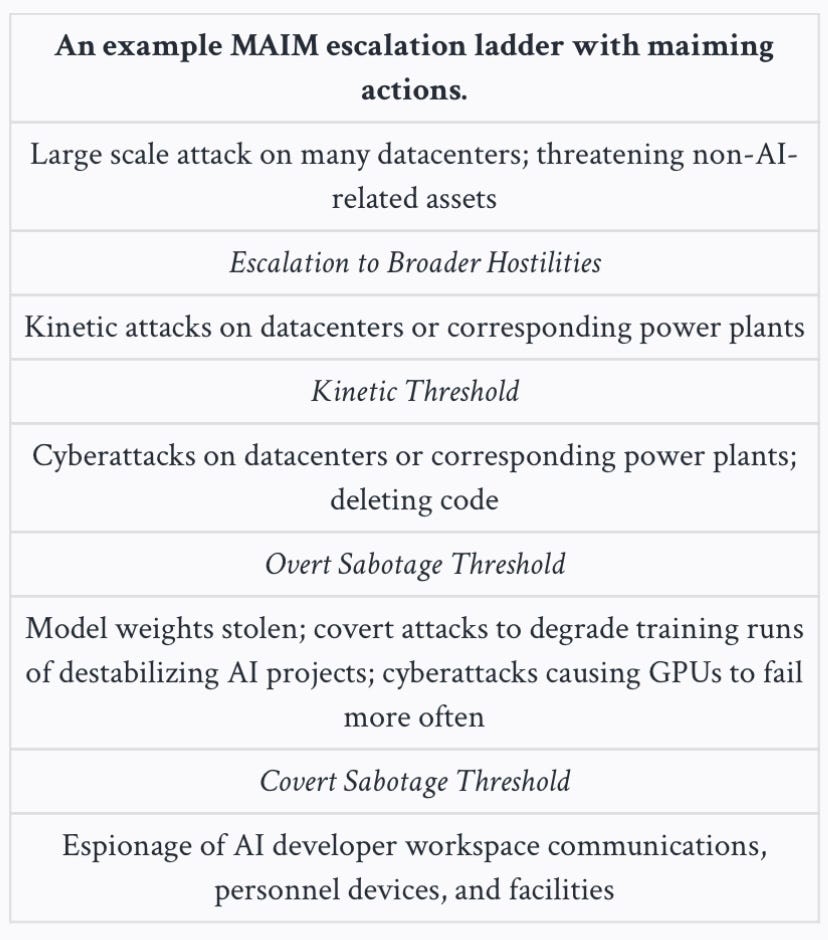

How would states sabotage rival projects?

The paper suggests that states may use a series of actions listed in the ladder above. Ideally, states would begin with less hostile actions at the lower rungs of the ladder, like using spies to tamper with model weights, and potentially escalate to more hostile methods, depending on the perceived scale of risk and the rival’s reluctance to yield to the first round of MAIM attacks.

What’s the cue to attack?

Here’s where things get interesting. The essence of MAIM is to prevent states from attaining highly powerful systems that give them a strategic monopoly. But how do we ascertain that a state is actively working towards this? And, importantly, how do we tell when it is on the verge of achieving it, so that a timely attack that nullifies its effort can be launched before the window for sabotage closes? This would be the red line, the crossing of which signifies that a state is on the brink of achieving superintelligence and others must intervene. With MAD, the red line is simply a nuclear strike. If the Soviets attacked the US, or were demonstrably preparing to, they had crossed the red line and would face retaliation from the US.

With MAIM, this red line is difficult to define. In Superintelligence Strategy, the red line is an “intelligence recursion.” An intelligence recursion is simply fully automated AI research and development. Think of thousands of AIs autonomously carrying out AI research to build a more capable AI. Today, AI research and progress are largely driven by human researchers, though advanced AI systems, to the extent of their current capabilities, now assist with parts of the R&D pipeline. But AI-assisted R&D differs dramatically from the intelligence recursion we speak of. Here’s how.

Imagine we develop an AI model that autonomously performs the task of AI research at a world-class level. AIs are already outcompeting human researchers at some of the skills needed to automate AI research, so this isn’t just sci-fi. We could copy our world-class model a hundred thousand times, and we’d have a team of 100,000 automated AI researchers working round the clock. This is feasible because copying a trained model does not require retraining it, so we would not incur the initial time and computational costs again.

Some evidence shows that the computing power needed to train a frontier AI model would suffice to run hundreds of thousands of copies of the same model in parallel. So all we need to do is run those copies and task them with advancing frontier capabilities. Fortunately for us, these AI copies are capable of forming a “hive mind”, so that whatever one copy learns is instantly known to all.

As machines, they’d work far faster than the best human researchers, with no need to sleep or take breaks. With each AI working at, say, 20-35x the pace of a human researcher, 100,000 AIs conducting autonomous, collective AI research could compress a decade of AI discovery into around a year. Even if the results are not so seismic, we could repeat the process with the newly engineered AI and iterate again and again until we are several orders of magnitude ahead of where we started.
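For readers who want to see the arithmetic behind these figures, here is a back-of-the-envelope sketch in Python. It is not a forecast and it does not come from Superintelligence Strategy. The function names are my own, and both functions rest on loud assumptions: perfect parallelism with no compute or coordination bottlenecks, and an identical gain in every repeated round. The 100,000 copies, the 20-35x pace and the tenfold compression are the illustrative numbers used above.

```python
# Back-of-the-envelope sketch. The figures come from the paragraph above;
# the function names and the "same gain every round" rule are assumptions.

def naive_researcher_years(copies: int, speedup: float, calendar_years: float = 1.0) -> float:
    """Human-researcher-years of effort, assuming perfect parallelism and
    no compute, coordination, or idea bottlenecks (an idealization)."""
    return copies * speedup * calendar_years


def rounds_to_reach(target_multiple: float, gain_per_round: float) -> int:
    """Number of repeated rounds needed for compounded gains to reach
    target_multiple, assuming every round yields the same gain."""
    if gain_per_round <= 1:
        raise ValueError("gain_per_round must exceed 1 for progress to compound")
    rounds, total = 0, 1.0
    while total < target_multiple:
        total *= gain_per_round
        rounds += 1
    return rounds


COPIES = 100_000      # illustrative fleet size used in the text
SPEEDUPS = (20, 35)   # pace relative to a human researcher, from the text
COMPRESSION = 10      # a decade of discovery in around a year

for speedup in SPEEDUPS:
    effort = naive_researcher_years(COPIES, speedup)
    print(f"{speedup}x pace: {effort:,.0f} researcher-years of effort per calendar year")

# Hypothetical target: three orders of magnitude ahead of the starting point.
print("rounds needed for 1,000x:", rounds_to_reach(1_000, COMPRESSION))
```

Under these idealized assumptions the fleet supplies between 2 million and 3.5 million researcher-years of effort per calendar year, and three rounds at a constant tenfold gain would reach 1,000x. Raw effort is not the same as progress, and real rounds would not deliver a constant gain, so treat these as bounds on the labor input and not as a prediction.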

This intelligence recursion would place us years ahead of any rival, or—in the most successful case—trigger an intelligence explosion that ushers in powerful breakthroughs that surpass human comprehension, including a superintelligence itself. It is this clear link between intelligence recursion and the emergence of superintelligence that justifies Hendrycks’ adoption of recursion as the redline, because, surely, a fleet of world-class AIs recursively improving themselves is the most credible path to superintelligence.

The Red Line Problem

I feel obliged to say that anyone reading this and doubting the plausibility of so-called intelligence recursion isn’t exactly wrong to do so. There are indeed intuitive grounds for skepticism. For example, how easy (or difficult) would it be to make the creative processes of thousands of AI copies sufficiently diverse, so that their numerical strength actually translates into meaningful acceleration of algorithmic progress? What about compute? Can we realistically satisfy the compute costs required to run experiments for hundreds of thousands of AIs making algorithmic discoveries, especially at a time when ever-scarce compute resources would also be needed to serve the growing inference demands of consumers eager to benefit from newly found AI capabilities?

I’m not sure what the definitive answers to these questions are, although others have written at length to address some of them. For now, I am content with supposing—as many industry experts do—that these concerns are surmountable and that the intelligence recursion could happen at this scale. But even if we suppose so, why might the recursion still be flawed as the red line in a deterrence strategy that aims to prevent states from seeking to build destabilizing AI projects?

1. The red line has to be a line

How many AIs working on automated AI research constitute an intelligence recursion? There’s no clear answer. I’ve used 100,000 here for illustration. Superintelligence Strategy illustrates with 10,000. In reality, it might take far fewer or far more to build superintelligence. This ambiguity undermines deterrence, which depends on both sides agreeing on what counts as crossing the line. What one state presents as a harmless effort to scale AI capabilities for civilian or commercial applications may be interpreted by another as a deliberate attempt to seize strategic dominance. Long-standing differences in risk appetite across countries may also increase the likelihood of misinterpretation. Without a clear definition, worst-case assumptions may result in false positives that provoke preemptive attacks and cycles of reprisal.

A natural counter-argument here would read, “Why not just agree on a precise threshold?” But, as I will discuss later, the likelihood of consensus-building towards enabling sabotage seems low.

2. Intelligence explosion might take years—or just days

Hendrycks’ adoption of an intelligence recursion as the red line seems to rest on the presumption that the recursion would take months, and therefore be discernible over time. This is far from certain. It could happen rapidly, such that by the time it’s detected (if detected at all), it may already be too late for sabotage. For MAIM to work in such a fast takeoff scenario, monitoring and sabotage operations would need to be executed with perfection.

A fast takeoff scenario would allow very little margin for error in sabotage. Yet, unlike nuclear retaliation, it is uncertain whether MAIM attacks can guarantee the level of precision that would be required. Nuclear responses are foolproof. The same cannot be said for attempting to steal model weights, contaminate data or even launch cyberattacks. Defending against these is much easier than defending against a nuclear strike. It’s possible that these attacks carry more potency than I suggest, especially with recent advances in cyberoffense capabilities. But technical potency is not enough in deterrence. Credibility is perhaps more important. The deterred has to believe that the attacks would succeed in sabotaging their attempts, so that they are dissuaded from attempting to race to superintelligence altogether.[1]

I am not convinced that the attacks listed above have this level of credibility from a state perspective. They may appear manageable, for example by conducting counterintelligence sweeps or improving cyberdefense capabilities through advanced AI.

Moreover, even if the intelligence recursion is detected early, a failed first strike may leave no time for follow-up before the feedback loop successfully yields superintelligence. There is also the possibility that the clear stakes involved in such a scenario could prompt states to discard the step-by-step approach of the escalation ladder and instead resort to broader hostilities that are more likely to succeed and, paradoxically, increase the very instability which MAIM aims to prevent.

3. R&D capabilities are a dual-use dilemma

It would be much easier to monitor and interrupt a potentially destabilizing AI project if the red line were set earlier—say, at the development of AI with human-level capabilities in AI research and development. But that’s not a realistic threshold. AI systems that match human performance in programming or scientific reasoning are also the kinds of systems we want for entirely legitimate purposes. In fact, it is the stated goal of frontier AI companies like OpenAI and Anthropic to build systems of human-level capabilities across all cognitive domains!

However, developing AI with human-level capabilities in other related R&D domains, like programming, could incidentally result in AI R&D capabilities of the same threshold, especially since progress in one area can spill over into others. This means, for one, that drawing the line at the development of human-level AI R&D capabilities may also halt the development of other capabilities with socially beneficial use-cases. But it also means that even if states agreed not to cross the AI R&D red line, they could do so unintentionally, simply by pursuing powerful models for economic or scientific applications.

4. Detection is harder without significant compute scaling

Many forecasts on superintelligence and an intelligence recursion rightly envision a software-driven course. Hardware bottlenecks like compute scarcity and the implausibility of massively scaling manufacturing capacity make a hardware-led explosion unlikely. In fact, as I mentioned earlier, these same bottlenecks are often cited as viable grounds to question the plausibility of an intelligence recursion altogether. If a recursion is possible, it’s more likely to be driven by rapid software advances in training algorithms, neural architectures, data curation, scaffolding techniques, and so on. Rapid capability improvements would occur on existing hardware. Notably, such a recursion would likely be even faster, as it would circumvent the physical constraints of manufacturing hardware.

This is not good news for anyone hoping to detect that their adversaries have already begun an intelligence recursion. Compute usage and scaling are easily detected and tracked through surveillance imagery, energy usage and procurement records. This explains why compute governance has become a viable tool in AI policy, widely used in export controls, technology diffusion and risk classification. Software advancements, on the other hand, leave no outward trace. This makes a software-driven intelligence recursion far harder to detect. Thousands of AIs optimizing new methods across various axes of software performance could bring a state within range of superintelligence long before suspicious adversaries realize anything is amiss.

The resultant difficulty in distinguishing ordinary AI development from rapid progress towards a dangerous threshold takes us back to the familiar conundrum of worst-case assumptions, where states rely on their instincts to launch blind MAIM attacks.

5. Verification agreements may be much harder than we hope

Some of the problems mentioned so far would be alleviated if states simply opened up to their adversaries about their AI developments. To this end, Superintelligence Strategy suggests transparency measures such as mutual inspections, and also references the nuclear-era Open Skies Treaty. The idea is that states could agree to verification regimes to ensure no one is approaching the red line without the other knowing.

Here, I think the reference to nuclear agreements as a historical analogue for AI deterrence is somewhat misleading. Verification agreements on nuclear capabilities (globally and between the US and the USSR) came about largely because the world managed to achieve an unprecedented consensus on the existential danger of nuclear weapons. The atomic bombings of Hiroshima and Nagasaki delivered a clear demonstration of the destructive capabilities of nuclear power. That was enough to convince even adversaries that cooperation was necessary to avoid all-round destruction.

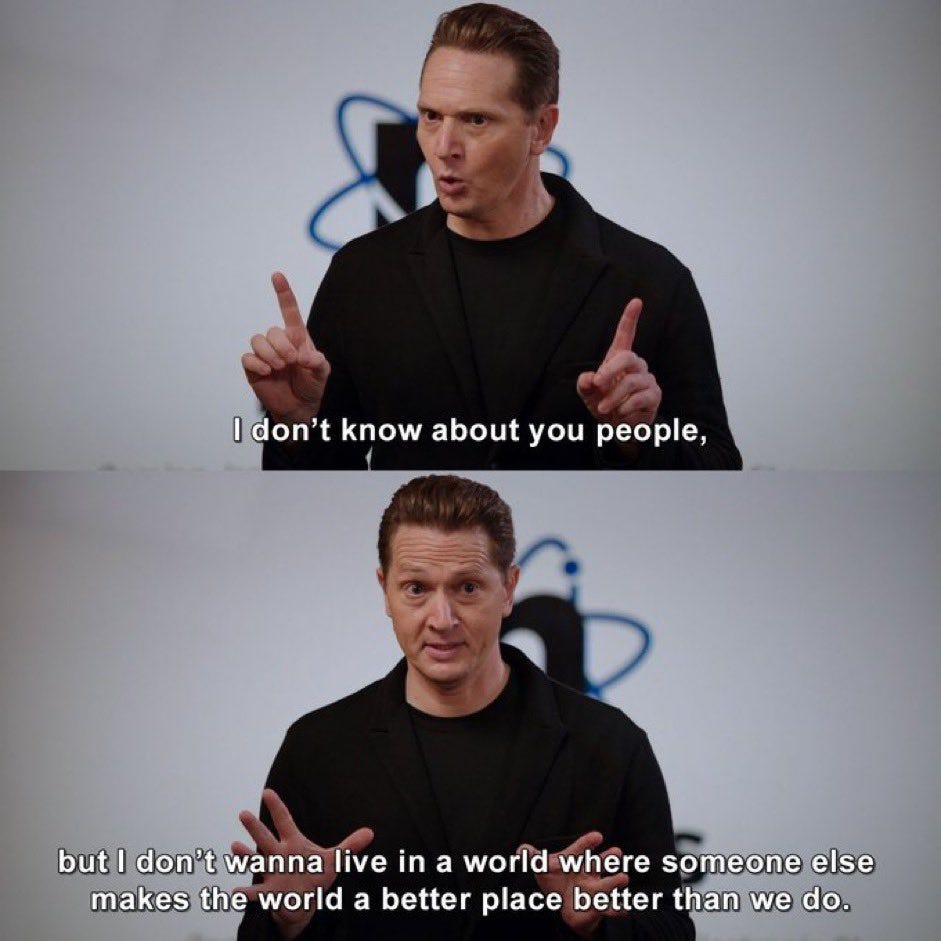

These conditions do not exist yet with AI. We are far from a consensus on AI being existentially harmful or strategically decisive.[2] On the contrary, many government officials fuel race rhetoric around AGI, citing fears that China might otherwise leapfrog the US, and urging the government to refrain from introducing safety requirements which may encumber the pace of AI innovation in America. In fact, the absence of a major AI disaster has been repeatedly referenced to downplay the need for caution. For example, here is Eric Schmidt, ironically one of the authors of Superintelligence Strategy, speaking in 2021:

“Why don’t we wait until something bad happens and then we can figure out how to regulate it — otherwise, you’re going to slow everybody down. Trust me, China is not busy stopping things because of regulation.”

There is definitely room for risk-reducing cooperation between the US and China, especially in areas where the shared benefits seem immediate. But in an environment of publicly reinforced rhetoric from the US about pursuing hegemony through AGI (rhetoric which is visible to China), working together to enable sabotage becomes counterproductive. Likewise, without an unequivocal understanding that scaling AI capabilities is an existential threat, it is unlikely that both states would agree to build conditions specifically designed to enable sabotage of each other’s “justified” ambitions. What’s more likely is that states may perform unilateral sabotage, as they always have, but stop short of proactively helping their adversaries sabotage them. It is especially unlikely, given the current trajectory of US-China relations and in the event that sabotage is assured, that either state would disclose when they are about to cross the recursion red line.

Final Note

The irony of it all is that, despite what seem to be clear reasons why intelligence recursion may be a flawed red line for deterrence, I would probably still consider it if asked to suggest a red line for mutual sabotage. Fully automated research is, after all, a highly credible path to superintelligence. Perhaps the fact that the most reasonable red line in a deterrence strategy is riddled with so many difficulties points to a deeper problem. The MAIM framework itself may be fundamentally unworkable as a way to deter a superintelligence race.

[1] The emphasis on achieving deterrence from the outset may not seem obviously important. But remember that it is not only the attainment of superintelligence that threatens global stability. A reckless race to superintelligence, in itself, can engender potentially dire consequences like losing control of advanced AI. That’s why deterrence should apply not just to crossing the final red line, but to the destabilizing pursuit itself.

[2] In fact, we are yet to agree on whether superintelligence is dangerous by default, or even on whether the concept itself is truly possible.
